7-Monte Carlo Sampling

OpenJij implements Simulated Annealing (SA). If we keep the temperature constant, we can sample spin configurations from the canonical distribution at that temperature.

In the following, we deal with the fully connected ferromagnetic Ising model.

We scale each coupling by \(1/N\), where \(N\) is the system size, so that the total energy grows in proportion to \(N\) rather than \(N^2\). In addition, we choose \(J=-1\), so every pair of spins has the coupling \(J_{ij} = -1/N\).

[1]:

# import libraries
import openjij as oj
import numpy as np
import matplotlib.pyplot as plt

# set sampler
sampler = oj.SASampler(num_reads=100)

# set fully_connected problem
def fully_connected(n):
    h, J = {}, {}
    for i in range(n-1):
        for j in range(i+1, n):
            J[i, j] = -1/n
    return h, J

# set h, J
h, J = fully_connected(n=500)
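As a quick sanity check on the couplings, the sketch below evaluates the energy \(E = \sum_{i<j} J_{ij} \sigma_i \sigma_j + \sum_i h_i \sigma_i\) of two small configurations by hand. It uses only the standard library and does not call OpenJij. The helper `ising_energy` and the names `h_small` and `J_small` are introduced here for illustration and are not part of the original notebook.

```python
def fully_connected(n):
    """Same construction as the cell above: no fields, couplings -1/n."""
    h, J = {}, {}
    for i in range(n - 1):
        for j in range(i + 1, n):
            J[i, j] = -1 / n
    return h, J

def ising_energy(state, h, J):
    """E = sum_{i<j} J_ij s_i s_j + sum_i h_i s_i for spins s_i in {-1, +1}."""
    pair_term = sum(coupling * state[i] * state[j] for (i, j), coupling in J.items())
    field_term = sum(field * state[i] for i, field in h.items())
    return pair_term + field_term

n = 8
h_small, J_small = fully_connected(n)

aligned = [1] * n                  # all spins up
alternating = [1, -1] * (n // 2)   # zero total magnetization

e_aligned = ising_energy(aligned, h_small, J_small)
e_alternating = ising_energy(alternating, h_small, J_small)
print(e_aligned, e_alternating)
```

The aligned state has the lower energy, as expected for a ferromagnetic model, and its energy per spin stays of order one because of the \(1/N\) scaling.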

Let us compute the magnetization, defined below, at each temperature.

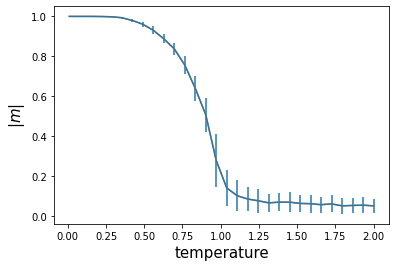

The closer this value is to 1, the more aligned the spins are (ferromagnetic). Conversely, the closer it is to 0, the more disordered the spins are. If we run OpenJij at a constant temperature, we find that the magnetization approaches 0 when the temperature is around 1.0. This is because thermal fluctuations increasingly disorder the spins as the temperature rises.
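To make the two limits concrete, here is a minimal sketch that evaluates the absolute magnetization for a fully aligned configuration and for a random one. It assumes the same expression as the sampling code in this notebook, the absolute value of the mean spin. The function name `abs_magnetization` and the toy configurations are illustrative only.

```python
import random

def abs_magnetization(state):
    """|m| = |(1/N) * sum_i s_i| for a spin configuration with s_i in {-1, +1}."""
    return abs(sum(state) / len(state))

rng = random.Random(0)  # fixed seed so the example is reproducible
n_spins = 1000

ordered = [1] * n_spins                                      # ferromagnetic limit
disordered = [rng.choice((-1, 1)) for _ in range(n_spins)]   # high-temperature limit

print(abs_magnetization(ordered))     # 1.0
print(abs_magnetization(disordered))  # small, of order 1/sqrt(n_spins)
```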

[2]:

# set a list of temperatures
temp_list = np.linspace(0.01, 2, 30)

# compute the magnetization and its standard deviation
mag, mag_std = [], []
for temp in temp_list:
    beta = 1.0/temp
    # a single schedule entry keeps beta fixed for 100 steps
    schedule = [[beta, 100]]
    response = sampler.sample_ising(h, J, schedule=schedule)
    mag_list = [np.abs(np.mean(state)) for state in response.states]
    mag_std.append(np.std(mag_list))
    mag.append(np.mean(mag_list))

[3]:

# visualize result
plt.errorbar(temp_list, mag, yerr=mag_std)
plt.plot(temp_list, mag)
plt.xlabel('temperature', fontsize=15)
plt.ylabel(r'$|m|$', fontsize=15)
plt.show()
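For comparison with the sampled curve, the sketch below solves the standard mean-field (Curie-Weiss) self-consistency equation \(m = \tanh(m/T)\), which describes the fully connected model with couplings \(-1/N\) in the limit of large \(N\) (taking \(k_B = 1\)). This is a textbook result added here for orientation, not something OpenJij computes. The function name and the iteration count are arbitrary choices.

```python
import math

def mean_field_magnetization(temp, iterations=2000):
    """Iterate m -> tanh(m / temp) starting from the fully ordered state m = 1."""
    m = 1.0
    for _ in range(iterations):
        m = math.tanh(m / temp)
    return m

for temp in (0.5, 0.9, 1.1, 1.5):
    print(f"T = {temp:.1f}: m = {mean_field_magnetization(temp):.3f}")
```

The nonzero solution disappears at \(T = 1\), which is consistent with the sampled magnetization dropping toward 0 near temperature 1.0. At finite \(N\) the sampled curve is rounded rather than sharp.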

Binder cumulant

[5]:

# calculation of U4
def u_4(states):
    m = np.array([np.mean(state) for state in states])
    return 0.5 * (3-np.mean(m**4)/(np.mean(m**2)**2))

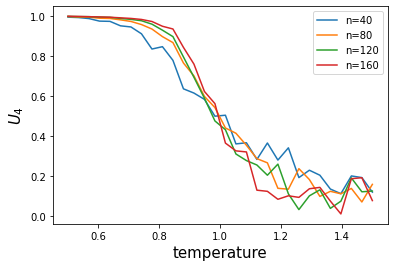

We defer to a statistical mechanics textbook for details. This quantity, the Binder cumulant \(U_4\), is close to 1 in the ferromagnetic phase, where the magnetization approaches 1, and close to 0 in the paramagnetic phase, where the magnetization approaches 0. Furthermore, at the phase transition point \(U_4\) is known to take a value independent of the system size. Therefore, we can repeat the numerical experiment above for several system sizes: the temperature at which the \(U_4\) curves intersect at a single point is the phase transition point.
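The two limiting values can be checked without running a sampler. The sketch below applies the same formula as `u_4` to synthetic magnetization samples: values of exactly \(\pm 1\) for the ordered phase, and Gaussian values centered at 0 for the disordered phase. The Gaussian model is an assumption made for illustration (motivated by the central limit theorem), and `binder_cumulant` is a helper name introduced here.

```python
import random

def binder_cumulant(m_samples):
    """U_4 = (1/2) * (3 - <m^4> / <m^2>^2), applied directly to magnetization samples."""
    m2 = sum(m ** 2 for m in m_samples) / len(m_samples)
    m4 = sum(m ** 4 for m in m_samples) / len(m_samples)
    return 0.5 * (3 - m4 / m2 ** 2)

rng = random.Random(0)  # fixed seed for reproducibility

# Ordered phase: every sample has m = +1 or m = -1.
ordered = [rng.choice((-1.0, 1.0)) for _ in range(10_000)]

# Disordered phase (assumed Gaussian): m fluctuates around 0.
disordered = [rng.gauss(0.0, 0.05) for _ in range(100_000)]

print(binder_cumulant(ordered))     # 1.0
print(binder_cumulant(disordered))  # close to 0
```

For Gaussian samples the ratio \(\langle m^4 \rangle / \langle m^2 \rangle^2\) tends to 3, which is why the prefactors in \(U_4\) are chosen so that the disordered limit is 0.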

[6]:

# set a list of system sizes
n_list = [40, 80, 120, 160]

# set a list of temperatures
temp_list = np.linspace(0.5, 1.5, 30)

# set sampler
sampler = oj.SASampler(num_reads=300)

u4_list_n = []
for n in n_list:
    # make instance
    h, J = fully_connected(n)
    u4_temp = []
    for temp in temp_list:
        beta = 1.0/temp
        # use more steps and more reads at temp >= 0.9, near and above the transition
        schedule = [[beta, 100 if temp < 0.9 else 300]]
        response = sampler.sample_ising(h, J,
                                        schedule=schedule, reinitialize_state=False,
                                        num_reads=100 if temp < 0.9 else 1000
                                        )
        u4_temp.append(u_4(response.states))
    u4_list_n.append(u4_temp)

[7]:

# visualize results
for n,u4_beta in zip(n_list,u4_list_n):
    plt.plot(temp_list, np.array(u4_beta), label='n={}'.format(n))

plt.legend()
plt.ylabel('$U_4$', fontsize=15)
plt.xlabel('temperature', fontsize=15)
plt.show()

The data fluctuate because of insufficient statistics. However, we can see that the curves for the four system sizes intersect at roughly a single point near temperature 1.0, which is approximately the phase transition point. Estimating the phase transition point with the Binder cumulant is a popular method that is also used in current numerical research.
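One simple way to read off the crossing numerically, rather than by eye, is to look for the temperature at which the curves for different sizes are closest to each other. The sketch below is one possible approach under stated assumptions, not the method used in this tutorial. The function `estimate_crossing`, the search window, and the synthetic curves (a hypothetical scaling form that crosses at \(T = 1\) by construction) are all illustrative.

```python
import math
from statistics import pvariance

def estimate_crossing(temps, curves, window):
    """Return the temperature inside `window` where the curves agree best.

    temps  : temperatures shared by all curves
    curves : one U_4 curve per system size, each the same length as temps
    window : (t_min, t_max); needed because all curves also agree far from
             the transition, where U_4 saturates at 1 or 0
    """
    t_min, t_max = window
    candidates = [
        (pvariance(values), t)
        for t, *values in zip(temps, *curves)
        if t_min <= t <= t_max
    ]
    return min(candidates)[1]  # temperature with the smallest spread

# Synthetic stand-in curves (NOT OpenJij output).
temps = [0.5 + 0.05 * k for k in range(21)]
sizes = [40, 80, 120, 160]
curves = [
    [0.5 * (1 + math.tanh((1 - t) * math.sqrt(n))) for t in temps]
    for n in sizes
]

t_cross = estimate_crossing(temps, curves, window=(0.8, 1.2))
print(t_cross)
```

With the sampled data one could call `estimate_crossing(list(temp_list), u4_list_n, window=(0.8, 1.2))`. On noisy curves the minimum can jump between neighboring temperatures, so the result should be read as a rough estimate.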

Of course, academic studies must be done carefully: we need not only sufficient statistics but also an evaluation of the statistical errors (computation of error bars). As this calculation is limited to an overview, accurate error evaluation and further analysis are omitted.
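As a rough illustration of what such an error evaluation could look like, the sketch below estimates an error bar for \(U_4\) with the bootstrap, a standard resampling technique that this tutorial itself does not use. It assumes the magnetization samples are statistically independent, which may not hold when reads are chained with `reinitialize_state=False`. The helper names and the Gaussian stand-in data are hypothetical.

```python
import random

def binder_cumulant(m_samples):
    """U_4 = (1/2) * (3 - <m^4> / <m^2>^2)."""
    m2 = sum(m ** 2 for m in m_samples) / len(m_samples)
    m4 = sum(m ** 4 for m in m_samples) / len(m_samples)
    return 0.5 * (3 - m4 / m2 ** 2)

def bootstrap_error(m_samples, estimator, n_resamples=200, seed=0):
    """Standard deviation of `estimator` over resamples drawn with replacement."""
    rng = random.Random(seed)
    estimates = []
    for _ in range(n_resamples):
        resample = rng.choices(m_samples, k=len(m_samples))
        estimates.append(estimator(resample))
    mean = sum(estimates) / n_resamples
    variance = sum((e - mean) ** 2 for e in estimates) / (n_resamples - 1)
    return variance ** 0.5

# Hypothetical samples standing in for [np.mean(s) for s in response.states].
rng = random.Random(1)
m_samples = [rng.gauss(0.0, 0.1) for _ in range(1000)]

u4 = binder_cumulant(m_samples)
u4_err = bootstrap_error(m_samples, binder_cumulant)
print(f"U_4 = {u4:.3f} +/- {u4_err:.3f}")
```

The resulting `u4_err` could be passed as `yerr` to `plt.errorbar` when plotting \(U_4\) against temperature.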

Conclusion

We introduced a method of Monte Carlo sampling with annealing and showed an example of a phase transition in statistical mechanics. OpenJij can be applied in a variety of ways, depending on your ideas.